Memory-based understanding

Direct Memory Access Parsing (DMAP) is a model of natural language understanding as memory search, rather than linguistic analysis. That means that the goal of DMAP is not to construct a parse tree or meaning structure. Its primary goal is to identify what prior knowledge a text is referring to. Assume a knowledge base with concepts for people, objects, actions, and instances of those concepts, i.e., particular people and object and episodes of events involving them. When DMAP is given an input like "Clyde ate peanuts", and Clyde the elephant is in memory, as is a previously seen event where Clyde ate peanuts, then DMAP should retrieve that event as a possible reference. If no specific instances are found, DMAP should have collected the concepts needed to construct instances to add to memory. DMAP was inspired by a very early system called the Teachable Language Comprehender.

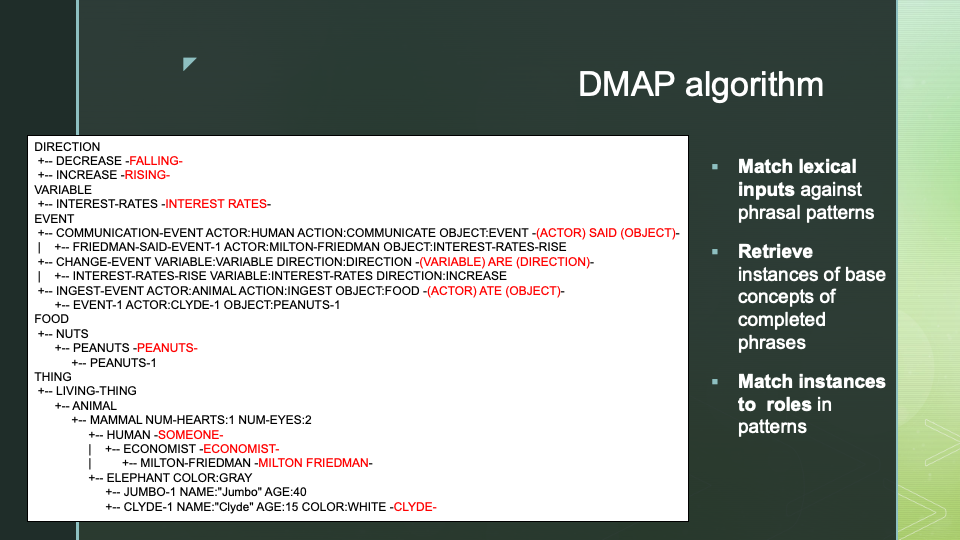

Assume our memory has the following curious mix of MOPs from various old AI examples. There are various kinds of objects, both concrete, like people, and abstract, like an economic variable or a direction of change.

economistwith the abstractionhumanhumanwith the abstractionmammalelephantwith the abstractionmammalmammalwith the abstractionanimalpeanutswith the abstractionnutsnutswith the abstractionsfoodandplant-

interest-rateswith the abstractionvariable -

increasewith the abstractiondirection

There are events in which these objects play roles. Note: here we sometimes use the same name for a role and the general concept that fills it. If we wanted to have concept hierarchies for roles, we would need to make the names distinct with a prefix or suffix.

-

ingest-eventwith the abstractioneventand the slots((actor animal) (action ingest) (object food)) -

communication-eventwith the abstractioneventand the slots((actor human) (action communicate) (object event)) -

change-eventwith the abstractioneventand the slots((variable variable) (direction direction))

And there are existing instances in memory of the above concepts, including events.

milton-friedmanwith the abstractioneconomistclyde-1with the abstractionelephantpeanuts-1with the abstractionpeanuts-

interest-rates-risewith the abstractionchange-eventand the slots((variable interest-rates) (direction increase)) -

friedman-said-event-1with the abstractioncommunication-eventand the slots((actor milton-friedman) (object interest-rates-rise))

ingest-event-1 with the abstraction ingest-event

and the slots ((actor clyde-1) (object peanuts-1))

The starting point is to associate phrasal patterns with concepts. The concept a phrase is associated with is called the base concept of the phrase. A phrasal pattern has one or more terms. Each term in a phrase is either

- a lexical item, such as

clydeorpeanuts, or - a role in the base MOP, such as

actororobject

In this little memory, we might have these phrasal patterns:

(elephant)onelephant(clyde)onclyde-1(milton friedman)onmilton-friedman(interest rates)oninterest-ratesriseonincrease(peanuts)onpeanuts((variable) are (direction))onchange-event((actor) ate (object))oningest-event((actor) said (object))oncommunication-event

The second last phrasal pattern says that an ingest-event can be referenced

by a reference to a possible actor of the event, i.e., some animal, followed

by the lexical item

"ate", followed by a reference to a possible object of the event, i.e., some food.

We put roles in phrasal patterns inside lists to keep them distinct from lexical items, and to allow

phrasal patterns with

role paths, i.e., a list of roles, leading from the base to a subpart,

e.g., (object name).

A MOP can have many phrasal patterns attached. A phrasal pattern can appear on many MOPs.

The algorithm outlined below is designed to err on the side of finding as many specific references as possible, leaving it to other memory processes to settle on the most consistent and likely. It is the exact opposite of an approach that rules out any deviation from grammatical correctness. This means the algorithm

- Embraces ambiguity, collecting all possible answers rather than picking one

-

Ignores unknown inputs, i.e., a phrase

x y zis allowed to match input elements in that order but not necessarily adjacent - Identifies existing relevant instances in memory as early as possible in processing

The DMAP algorithm

The DMAP algorithm maintains a parse state. This state tracks what DMAP has been recently seen and what it is ready to see. Initially DMAP is ready to see any initial item in any phrase in memory.

DMAP takes inputs left to right. If it matches the start of any phrase that DMAP is ready for,

DMAP adds the remainder of that phrase to the parse state.

For example, if (milton friedman) is a phrase, and milton

is seen, a lexical match occurs, and the

remaining phrase (friedman) is added to the parse state.

Note: the original phrases are not removed. They remain ready to match future inputs.

In a more fleshed-out system, lexical matching would handle things like tense and number.

When all the items in a phrase have been matched, phrase completion occurs, which triggers instance retrieval, where DMAP finds all instances of the base concept of the completed phrase.

Now DMAP looks for role matches between any retrieved instance

and the head of any phrase in the parse state.

An input matches a role at the head of phrase if the input

is an instance of the filler of that role in

the base concept of the phrase. For example, clyde-1 matches

(actor) in the phrase

((actor) ate (object)) because the filler of actor

in the base concept ingest-event is animal and clyde-1

is an animal.

When an instance matches a role in a phrase, DMAP adds the role and instance to a list

of slots for the matched phrase. In the example the slot

(actor clyde-1) would be attached to the new phrase

(ate (object)).

When a phrase with roles is completed, the slots are used to constrain

the instances found. For example, if the phrase ((actor) ate (object))

has the slots ((actor clyde-1) (object peanuts-1)) then DMAP

will try to find existing instances of an event where Clyde ate peanuts.

The completion of phrases can lead to a cascade of role matching and

further phrase matching. The following animation shows what happens

when (milton friedman says interest rates are rising. Note

in particular how many things happen when rising is read.

Implementation pitfalls

There are some subtleties to be aware of. It's important to let phrases match input sequences in as many ways as possible, skipping some input items if necessary. But this can easily lead to infinite loops.

-

One source of infinite loops comes from not distinguishing lexical

items with concepts with same names, such as

peanuts. One solution is to put all lexical items in a separate package. - Another source of infinite loops comes from MOPs that have slots containing very abstract concepts. For example, the object of a communication event can be an event. This could lead to a circular loop where the communication event just recognized becomes its own object. The solution here is to keep track of when phrase matches begin and end in the input stream, and make sure segments obey the expected order of input.

Getting Output from DMAP

Real life language understanding with an agent with episodic memory is not a stateless process. It's not like calling a function and getting an answer. We hear, we understand, we remember, we learn, in a continual process of memory updating.

For testing DMAP parsers in the exercises, it's useful to define a function that scans the parse state and identifies all the concepts that have been referred to recently. The parse state can be seen as a short-term memory of recent language understanding activity.