| Associate Professor of Computer Science; Departments of Electrical Engineering/Computer Science and Radio/Television/Film; Director, Division of Graphics and Interactive Media, EECS Department; Director, Animate Arts Program |

|

| Email: | ian@northwestern.edu |

| Blogs: |

http://ianhorswill.wordpress.com (AI) http://twigblog.wordpress.com (procedural animation, web comic) |

| Office: | EECS Department, Northwestern University, Ford Building, room 3-321, 2133 Sheridan Road, Evanston, IL 60208 |

| Voice | +1-847-467-1256 |

| FAX: | +1-847-491-5258 |

My work lies roughly within the areas of artificial intelligence and interactive art and entertainment. My AI research focuses on control systems for autonomous agents: how does an agent decide from moment to moment what to do, based on an ongoing stream of sensor data and a continually varying set of goals and entanglements with the world? My past work focused on robotics and computer vision, but my more recent work centers around the modeling and simulation of emotion, personality, and social behavior for virtual characters for games and interactive narrative. Interactive narrative is particularly interesting for AI because it provides a natural domain in which to examine aspects of human personality and behavior that one would not want to duplicate in service robots, such as aggression and depression.

Within interactive art and entertainment, I'm interested in exploring alternative genres and interaction modes that can expand the medium. Interactive narrative is particularly interesting because it offers the promise of an aesthetics that emphasizes experiences of identification, empathy, and affiliation, over mastery, frustration, and control. However, making a piece that provides those experiences in practice is a difficult task that involves a number of interesting research problems in computer science and cognitive science.

I also dabble in programming language implementation and work with the LocalStyle arts collective.

The Animate Arts Program is a joint project of Northwestern's schools of Engineering, Communications, Arts and Sciences, and Music. We offer an integrated, team-taught curriculum in the fundamentals of interactive multimedia arts and entertainment systems, including visual design, sound design, narrative, film and art theory, and of course programming and computer graphics. For information on our curriculum and how to join the program, see the Animate Arts Wiki.

Recent work is bringing psychological and neuroscience models of emotion and personality closer together. These models, such as Gray and McNaughton's revised (2000) Reinforcement Sensitivity Theory (RST), provide potential biological explanations both for 2-factor trait models of personality and for state disorders such as major depressive disorder, anxiety disorder, and panic disorder. Although not phrased in computational terms, RST is sufficiently precise to be modeled computationally. In joint work with Karl Fua, Andrew Ortony and Bill Revelle, we are working to model and implement RST in interactive virtual characters and to apply the model to schoolyard social interactions.

One of my current interests is in the ways in which human behavior is built atop a substrate of more basic behaviors that we share with other mammals. A particularly good example of this is the attachment behavior system, which has been well studied in child psychology. Attachment behavior begins as a relatively simple innate system that responds to low-level sensory cues such as parental proximity, eye contact, and smiling. But as the child develops, the attachment system becomes increasingly intertwined with higher-level reasoning processes. Moreover, it remains active throughout the child's life and forms part of the basis for adult romantic love. Attachment is therefore an ideal model system to study when trying to understand the interactions of the basic motivational and behavioral systems we share with other mammals and the specially developed reasoning processes unique to humans.

You can find a simple simulation here (press Tab to switch between character views and h to toggle debugging information) and a position paper on why attachment is an interesting model system here. This is based on Ainsworth's safe home base phenomenon, in which a young child explores the environment by making repeated excursions from the caregiver. Increasing distance from the parent increases anxiety, which eventually triggers attachment behaviors such as establishing eye contact, closing with the parent, and achieving physical contact (hugging).
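The safe home base loop described above can be pictured with a toy sketch. This is not the simulation linked above; it is a minimal illustration that assumes a one-dimensional world with the caregiver at distance 0, and the thresholds, speeds, and names (`COMFORT_RANGE`, `EYE_CONTACT_AT`, `RETURN_AT`, `Child`, `step`, `simulate`) are all hypothetical.

```python
from dataclasses import dataclass

# All constants are illustrative assumptions, not values from the real simulation.
COMFORT_RANGE = 10.0   # distance at which anxiety saturates at 1.0
EYE_CONTACT_AT = 0.5   # anxiety level that triggers checking back visually
RETURN_AT = 0.8        # anxiety level that triggers returning to the caregiver


@dataclass
class Child:
    distance: float = 0.0    # current distance from the caregiver
    returning: bool = False  # True while closing with the caregiver


def anxiety(distance: float) -> float:
    """Anxiety grows linearly with distance, capped at 1.0."""
    return min(1.0, distance / COMFORT_RANGE)


def step(child: Child) -> str:
    """Advance the child one tick and return the behavior it chose."""
    if anxiety(child.distance) >= RETURN_AT:
        child.returning = True
    if child.returning:
        # Close with the caregiver; physical contact ends the excursion.
        child.distance = max(0.0, child.distance - 2.0)
        if child.distance == 0.0:
            child.returning = False
            return "hug"
        return "approach"
    # Otherwise keep exploring, checking back visually once mildly anxious.
    level = anxiety(child.distance)
    child.distance += 1.0
    return "eye contact" if level >= EYE_CONTACT_AT else "explore"


def simulate(ticks: int) -> list[str]:
    """Run one child for the given number of ticks and collect its behaviors."""
    child = Child()
    return [step(child) for _ in range(ticks)]


if __name__ == "__main__":
    print(simulate(13))
```

Run for enough ticks, the child repeats the same cycle: explore, check back with eye contact, return, hug, and set out again.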

Most character animation is done either by hand or by instrumenting actors for motion capture, recording their movements, and then playing them back later. These techniques work well for films, but they are expensive, and especially so for interactive applications such as video games, where designers need to anticipate and digitize in advance every possible motion a character might need to make.

Procedural animation uses software to compute the motions at run-time, allowing a wider range of motions. In this work, I've combined a simple ragdoll physics engine with an animation system built on the principles of behavior-based robotics. The result is fluid, evocative, and believable motion that allows physical interactions between characters such as holding hands, hugging, grappling, and fighting.

Twig is free, open-source software. You can download the current demos and source release, as well as copies of the Twig papers, from the Twig blog.

I've been trying to test out features of Twig by writing short little pieces that use them and publishing the result as a kind of "web comic." It's published at (very) irregular intervals depending on my travel and teaching schedules, but should see a lot more activity come this summer. As of this writing, all the episodes are scripted (since scripting was the original feature I wanted to test), but my intention is to have subsequent episodes be at least partially interactive.

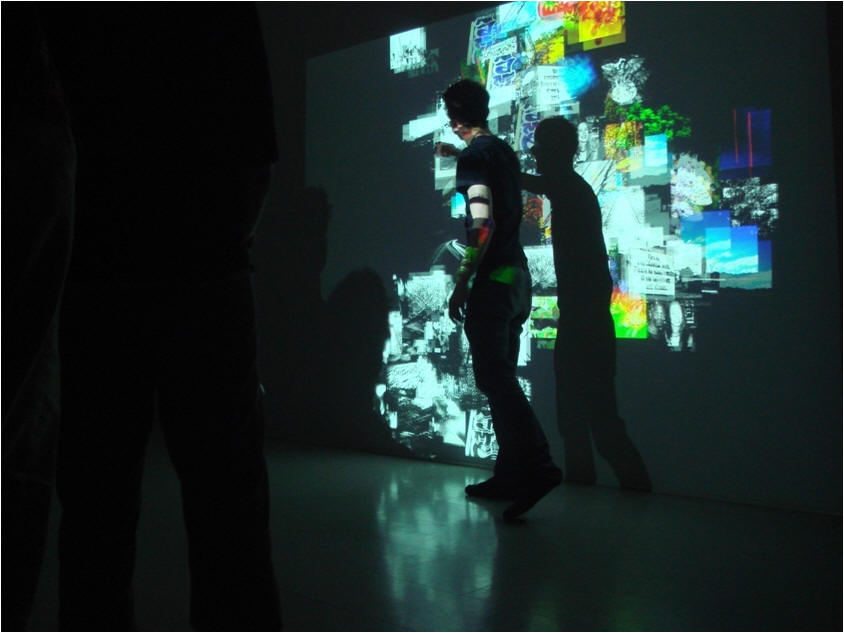

Adrian Ledda, 2005

Meta is a free, Scheme-like programming language that provides very good integration with the Microsoft .NET system. For beginning programmers, Meta provides a high-quality, relatively unintimidating development environment in which students can write relatively sophisticated programs. It provides a slow (it's interpreted) but otherwise industrial-strength Lisp system with extremely good .NET integration. Meta is used in the Animate Arts curriculum as well as in some sections of EECS-111.

Cerebus is a "self-demoing" robot that combines sensory-motor control with simple reflective knowledge about its own capabilities. Cerebus is a behavior-based system that uses role-passing to implement efficient inference grounded automatically in sensory data. All inferences in Cerebus are updated continuously at sensor frame rates. Role-passing is also used to implement reification of and reflection on its own state and capabilities.

HIVEMind is an architecture for distributed robot control using a distributed form of role-passing. All robots broadcast their entire knowledge bases every second, providing all team members with direct access to the sensory information and inference of all other team members, in effect, a kind of "group mind." (No Borg jokes, please). The representation of the knowledge base is sufficiently compact that only approximately 1% of a standard radio LAN is used per robot.
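To give a feel for why a whole knowledge base can be small enough to broadcast, here is a rough sketch that packs one bit-vector per predicate into a fixed-size packet. The packet layout, the predicate and role names, and the functions `pack_kb` and `unpack_kb` are assumptions made for illustration; they are not HIVEMind's actual wire format.

```python
import struct

# Hypothetical vocabulary shared by every robot on the team.
PREDICATES = ["see-object", "near", "grasped"]
ROLES = ["agent", "patient", "destination"]

# One byte of robot id followed by one 16-bit word per predicate,
# in network byte order with no padding.
PACKET_FORMAT = f"!B{len(PREDICATES)}H"


def pack_kb(robot_id: int, kb: dict[str, set[str]]) -> bytes:
    """Encode a knowledge base (predicate -> roles it holds of) as bytes."""
    words = []
    for pred in PREDICATES:
        bits = 0
        for i, role in enumerate(ROLES):
            if role in kb.get(pred, set()):
                bits |= 1 << i
        words.append(bits)
    return struct.pack(PACKET_FORMAT, robot_id, *words)


def unpack_kb(packet: bytes) -> tuple[int, dict[str, set[str]]]:
    """Decode a packet back into (robot id, knowledge base)."""
    robot_id, *words = struct.unpack(PACKET_FORMAT, packet)
    kb = {
        pred: {role for i, role in enumerate(ROLES) if (bits >> i) & 1}
        for pred, bits in zip(PREDICATES, words)
    }
    return robot_id, kb


if __name__ == "__main__":
    team_view: dict[int, dict[str, set[str]]] = {}
    packet = pack_kb(2, {"see-object": {"patient"}, "near": {"patient", "destination"}})
    sender, kb = unpack_kb(packet)
    team_view[sender] = kb  # each receiver keeps the latest snapshot per teammate
    print(len(packet), team_view)
```

In this toy encoding each robot's entire state fits in a few bytes, so every receiver can simply keep the latest snapshot from each teammate.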

The Sony Aibo (a.k.a. the "Sony dog") uses one of our vision algorithms for visual navigation. Here are some videos of tests in our lab on prototype Aibos:

Artificial intelligence conferences can be fraught with peril. Here is a video from demo night at AAAI-99 (30MB). No humans were harmed during the making of this motion picture. The dog, however, stripped a set-screw in one of the gear trains in its neck. (The grad students doing the demo also went into hiding for several weeks).

Kluge was the first robot that used role passing. Here it follows simple textual commands using role passing.

To say the least, art and entertainment have been historically undervalued in academic computer science. This is just silly. There are a lot of really interesting technical problems in art-tech, and there is even a lot of money in it; in the U.S., the entertainment budget is larger than the military budget. Most importantly, however, art-tech is devastatingly cool.

That said, I don't actually get to do much art at the moment. However, here are some cool things my grad students have done:

Many people know me for my computer vision work. However, I think of myself as an AI (artificial intelligence) researcher; I'm interested in how the mind works and how its structure is conditioned by the requirements of embodied activity in the world. That's academic-speak for "I want to understand how the practical needs of seeing and doing affect the design of thinking."

My current work involves the problem of integrating higher-level reasoning systems with lower-level sensory-motor systems on autonomous robots. The reasoning systems are typically based on a transaction-like model of computation in which different components of the agent communicate by reading and writing a shared database of assertions in some logical language. Sensory-motor systems are typically based on a circuit-like model of computation in which data streams in real time through a series of finite-state or zero-state parallel processes, from the sensory inputs to the motors and other actuators.

While very powerful, the transaction-oriented model of computation is very difficult to keep in synchrony with the sensory-motor systems. As data streams through sensory-motor circuits, statements in the database must constantly be asserted, retracted, and reasserted so as to track the changing sensory data. Other statements whose justifications depend on the changing sensory assertions must themselves be asserted and retracted, as must the statements that depend on the dependent assertions, and so on. An attractive alternative is to find ways of generalizing circuit computations to handle problems that previously required the transaction model. If successful, this promises to combine the responsiveness of sensory-motor circuits with much of the power of full-blown inference. It also provides a more appealing model of how cognition can be performed in humans, since the transaction model is difficult or impossible to implement efficiently in neural circuitry.

We've developed a set of techniques, called role passing, for compiling inference processes into feed-forward parallel networks that drive and are driven by a set of distributed sensory representations. The techniques, while limited, are extremely efficient: a rule base of 1000 rules can be run to deductive closure on every cycle of the system's decision loop at 100Hz or more using less than 1% of a typical CPU. In other words, any computations that can be phrased in this framework are effectively free. Since the techniques involve the use of linguistic roles as keys into short-term memory, they make it relatively easy to build simple natural language parsers and generators that operate on these distributed representations. While not fluent, these systems still form useful instruction-following and question-answering interfaces.
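One way to picture why this kind of inference is so cheap is the toy sketch below. It assumes each unary predicate is stored as a bit-vector with one bit per role, so that a rule such as "reachable(X) if visible(X) and near(X)" becomes a single bitwise AND covering every role at once. The roles, predicates, rules, and function names here are hypothetical, and the sketch is only an illustration of the idea, not our actual compiler or its output.

```python
# Hypothetical role set; each role gets one bit position.
ROLES = ["agent", "patient", "source", "destination"]
ROLE_BIT = {name: 1 << i for i, name in enumerate(ROLES)}
ALL_ROLES = (1 << len(ROLES)) - 1

# Hypothetical rule base: (head, body predicates). Rules are listed in
# dependency order, so one forward pass reaches deductive closure,
# just as a feed-forward network would.
RULES = [
    ("reachable", ["visible", "near"]),
    ("graspable", ["reachable", "small"]),
]


def run_rules(sensed: dict[str, int]) -> dict[str, int]:
    """Recompute every derived predicate from scratch for the current frame."""
    state = dict(sensed)
    for head, body in RULES:
        bits = ALL_ROLES
        for pred in body:
            bits &= state.get(pred, 0)  # conjunction over all roles in parallel
        state[head] = state.get(head, 0) | bits
    return state


def roles_of(bits: int) -> list[str]:
    """Decode a bit-vector back into the role names it contains."""
    return [name for name in ROLES if bits & ROLE_BIT[name]]


if __name__ == "__main__":
    frame = {
        "visible": ROLE_BIT["agent"] | ROLE_BIT["patient"],
        "near": ROLE_BIT["patient"],
        "small": ROLE_BIT["patient"] | ROLE_BIT["source"],
    }
    result = run_rules(frame)
    print(roles_of(result["graspable"]))
```

Because everything is recomputed from the current sensor frame on each cycle, nothing ever needs to be retracted: when the sensed bit-vectors change, the derived ones change with them on the next pass.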

We have also developed a compiler for a programming language called GRL (Generic Robot Language) that allows many of the traditional techniques of AI programming, such as list processing, the use of higher-order procedures, data-directed type dispatch, and reflection and reification, to be used in parallel networks of communicating finite-state machines.

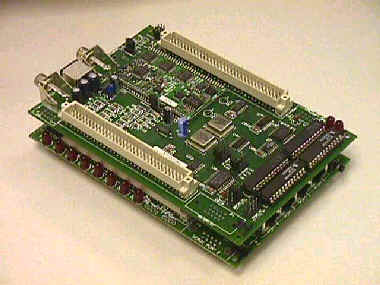

Sony Aibo prototype hardware
