Summary:

I expected this project to be quite a bit of work. And it hasn't disappointed me yet. Fortunately, this week I managed to put in a lot of time, and it's beginning to pay off.

- The base genetic programming engine is basically finished now. (There may be a few things I have to tweak along the way, but hopefully not.)

- Cloning, mutation, and crossover are fully implemented, with sliders to control the frequency of each type of operation.

- Generations run, and a fitness function is evaluated for each program.

- I succeeded in making the engine fairly modular, so it shouldn't be hard to adapt to all sorts of different models.
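The post doesn't show the engine's code. To show roughly how slider-weighted cloning, mutation, and crossover fit into a generation loop, here is a minimal Python sketch. Everything concrete in it is an assumption. Programs are flat lists of hypothetical instructions (`forward`, `left`, `right`) rather than whatever representation the real engine uses. Parents are picked by tournament selection, because the post doesn't say how selection works. The `rates` dictionary stands in for the sliders. The fitness function is passed in as a parameter, which is one way to get the kind of modularity described above.

```python
import random
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical primitives for a maze-marcher-style agent; the post does not
# say what instruction set (or program representation) the real engine uses.
PRIMITIVES = ["forward", "left", "right"]
MAX_LEN = 20  # cap on program length so crossover cannot grow programs forever

Program = List[str]
Fitness = Callable[[Program], float]


def random_program(rng: random.Random, max_len: int = 10) -> Program:
    """Build a random linear program of 1..max_len instructions."""
    return [rng.choice(PRIMITIVES) for _ in range(rng.randint(1, max_len))]


def mutate(prog: Program, rng: random.Random) -> Program:
    """Point mutation: replace one randomly chosen instruction."""
    child = list(prog)
    child[rng.randrange(len(child))] = rng.choice(PRIMITIVES)
    return child


def crossover(a: Program, b: Program, rng: random.Random) -> Program:
    """One-point crossover: a prefix of `a` joined to a suffix of `b`."""
    i = rng.randint(1, len(a))  # keep at least one instruction from a
    j = rng.randrange(len(b))   # keep at least one instruction from b
    return (a[:i] + b[j:])[:MAX_LEN]


def tournament(pop: List[Program], scores: List[float],
               rng: random.Random, k: int = 3) -> Program:
    """Return the fittest of k randomly sampled individuals (higher is fitter)."""
    contenders = rng.sample(range(len(pop)), min(k, len(pop)))
    return pop[max(contenders, key=lambda idx: scores[idx])]


def next_generation(pop: List[Program], fitness: Fitness,
                    rates: Dict[str, float],
                    rng: random.Random) -> Tuple[List[Program], float]:
    """Score `pop`, then breed a same-sized population.

    `rates` plays the role of the sliders: relative weights for
    "clone", "mutate", and "crossover" (at least one must be positive).
    Returns the new population and the best score of the old one.
    """
    scores = [fitness(p) for p in pop]
    ops = list(rates)
    weights = [rates[op] for op in ops]
    children: List[Program] = []
    while len(children) < len(pop):
        op = rng.choices(ops, weights=weights)[0]
        parent = tournament(pop, scores, rng)
        if op == "clone":
            children.append(list(parent))
        elif op == "mutate":
            children.append(mutate(parent, rng))
        elif op == "crossover":
            children.append(crossover(parent, tournament(pop, scores, rng), rng))
        else:
            raise ValueError(f"unknown operation: {op}")
    return children, max(scores)


def run(fitness: Fitness, pop_size: int = 50, generations: int = 20,
        rates: Optional[Dict[str, float]] = None, seed: int = 0):
    """Evolve for a fixed number of generations.

    Returns the final population and the best score seen in each generation.
    """
    rng = random.Random(seed)
    rates = rates or {"clone": 0.1, "mutate": 0.4, "crossover": 0.5}
    pop = [random_program(rng) for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        pop, best = next_generation(pop, fitness, rates, rng)
        history.append(best)
    return pop, history


if __name__ == "__main__":
    # Toy stand-in fitness (hypothetical): reward programs with more "forward" steps.
    final_pop, best_per_generation = run(lambda p: p.count("forward"))
    print(best_per_generation)
```

In this sketch, adapting the engine to a different model would mean changing only `PRIMITIVES` and the fitness function handed to `run`. A real maze-marcher fitness would presumably come from simulating each program in the maze, which the sketch does not attempt.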

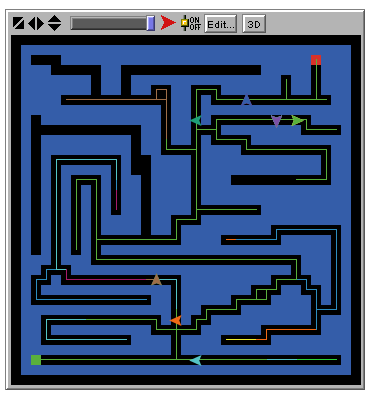

- The maze marcher model is starting to come together now. (A picture of a beta run is shown above.)

(Maze marchers try to find the goal!)